Stretch Community News - October/November 2023

Welcome to the Hello Robot monthly community update!

We’ve had a great time getting to meet so many community members at IROS and CoRL recently. Thank you for stopping by to say hello! In this update, we have open-source imitation learning in the home, a massive open dataset, and new publications on perception and learning.

Read on for details of the great work being done with Stretch. If you’d like to see your work featured in a future newsletter or on the Stretch Community Repo, please let us know!

DOBB-E, an open-source general-purpose system for learning manipulation tasks in the home, was released by Prof. Lerrel Pinto’s team at NYU in collaboration with Meta. Collecting data with a simple handheld tool, Stretch learned to complete over 100 tasks in ten real homes in only twenty minutes each! Try it now!

Researchers from over 30 institutions released the Open X-Embodiment Dataset, the largest open-source real robot dataset to date. It contains 1M+ real robot trajectories spanning 22 robot embodiments, with Stretch as one of the most represented mobile manipulators.

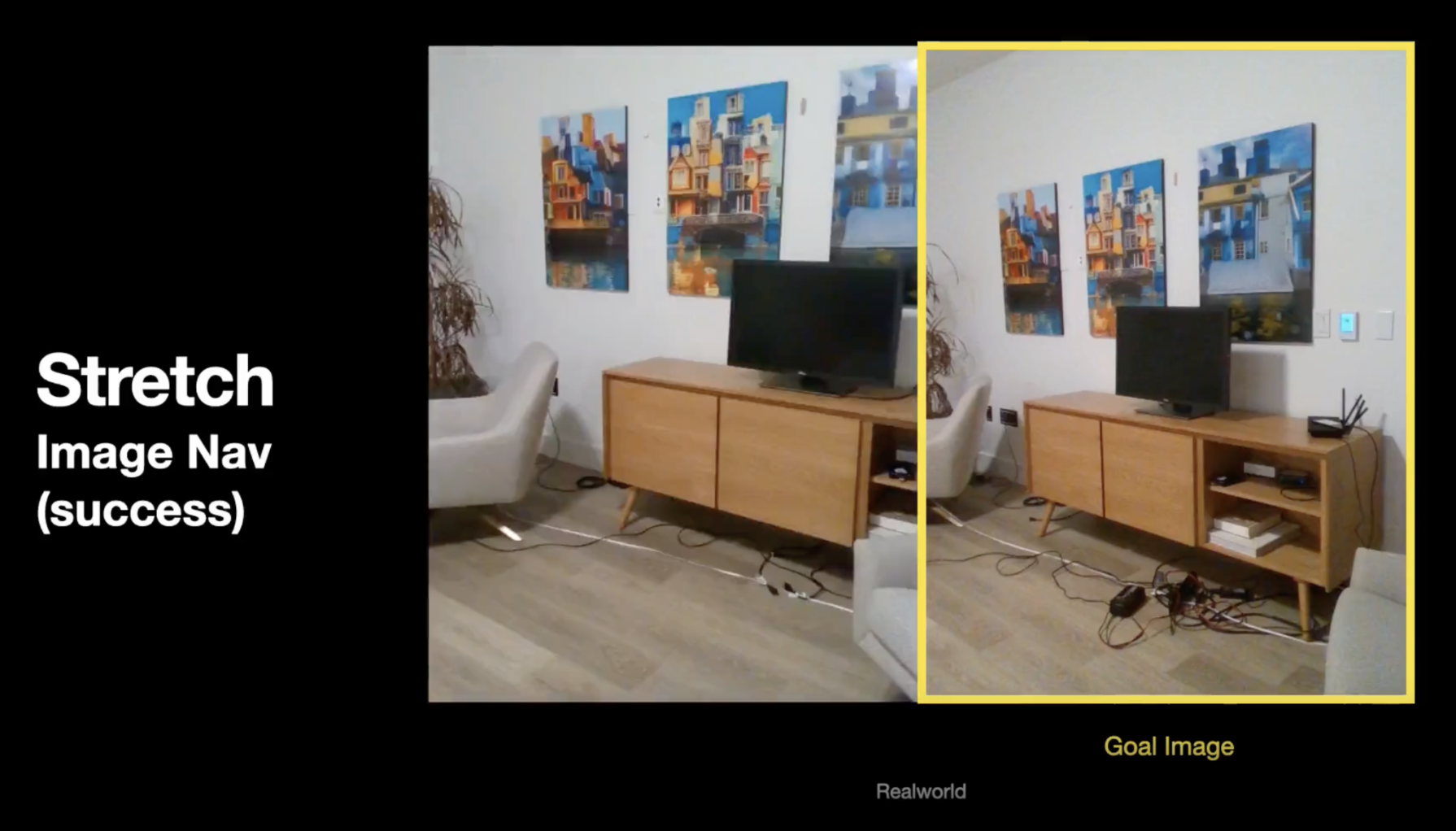

Pretrained Visual Representations (PVRs) are a promising development towards general-purpose visual perception for robotics. New work from Meta AI, Georgia Tech, and Stanford studied the sim-to-real performance of PVRs on hardware platforms, using Stretch for an image goal navigation task and achieving a first-of-its-kind zero-shot success rate of 90%.

Shelf-picking robots need to efficiently identify and handle a diverse range of items, but edge cases present a real risk of failure. University of Washington’s Prof. Maya Cakmak is putting humans in the loop to aid robots like Stretch in particularly challenging grasping tasks.

Could you sneak up on a robot? A new Georgia Tech paper explored an audio-only person detection model that can passively find and track a person even when they attempt to move quietly.

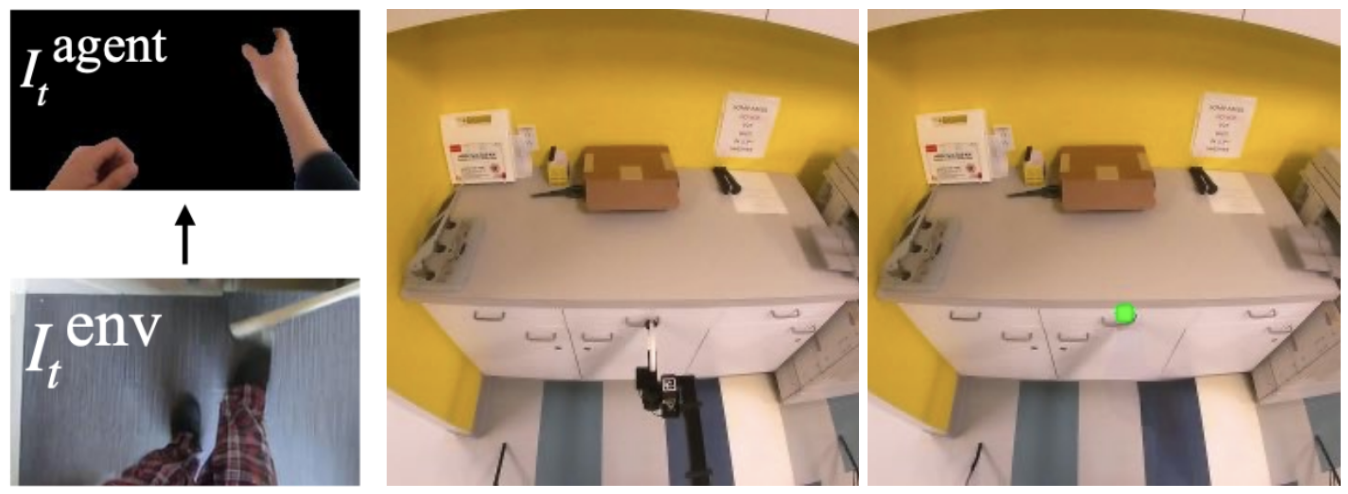

Learning reward functions from egocentric video can be complicated by the mismatch between the human hand and the robot end-effector. New UIUC work proposes video inpainting via diffusion model (VIDM) to separate the agent from the environment, using video to train Stretch to open drawers.