Stretch Community News - January 2026

Happy New Year!

The team here at Hello Robot hopes you had a wonderful holiday season and wishes you all the best for 2026!

This month’s Stretch Community News features exciting new work from across the Stretch community, including recent research presented at IROS and CoRL, as well as impactful real-world deployments. These projects explore open-vocabulary navigation and manipulation, dynamic memory and scene understanding, faster visual-language navigation, and robust reasoning in changing environments. We’re also thrilled to see Stretch leaving the lab and exploring applications in precision agriculture and in-home assistive robotics for dementia care.

Read on for more details! And if you’d like your work featured in a future newsletter, we’d love to hear from you. Drop us a line at community@hello-robot.com.

Cheers,

Aaron Edsinger

CEO - Hello Robot

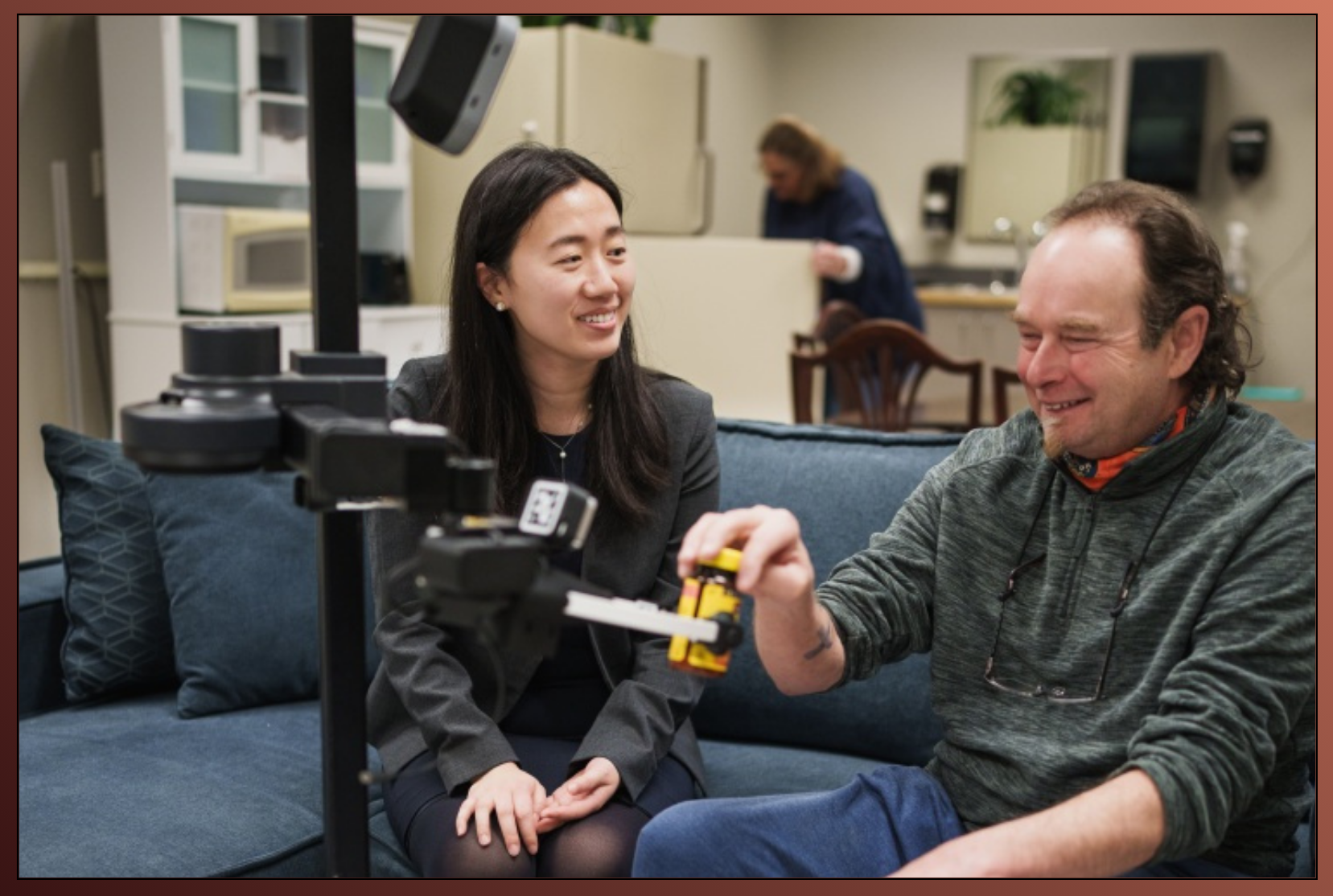

Researchers at the University of New Hampshire have begun testing a socially assistive robot in the homes of families caring for loved ones with dementia, moving this work from the lab into real-world settings. The robot combines onboard intelligence with smart home sensors to provide reminders, safety monitoring, and personalized support tailored to individual needs. This home-based testing marks an important step toward helping people with dementia age in place while easing the day-to-day burden on caregivers.

LagMemo is a new visual navigation system, from researchers at Peking University, that allows robots to flexibly navigate to multiple goals using open-vocabulary, multi-modal instructions. The system builds a 3D language-aware memory during exploration, which it later queries to predict and verify goal locations in real time as tasks are issued. Evaluations on a newly curated benchmark show that LagMemo outperforms existing methods, demonstrating more flexible and reliable navigation in complex, real-world environments.

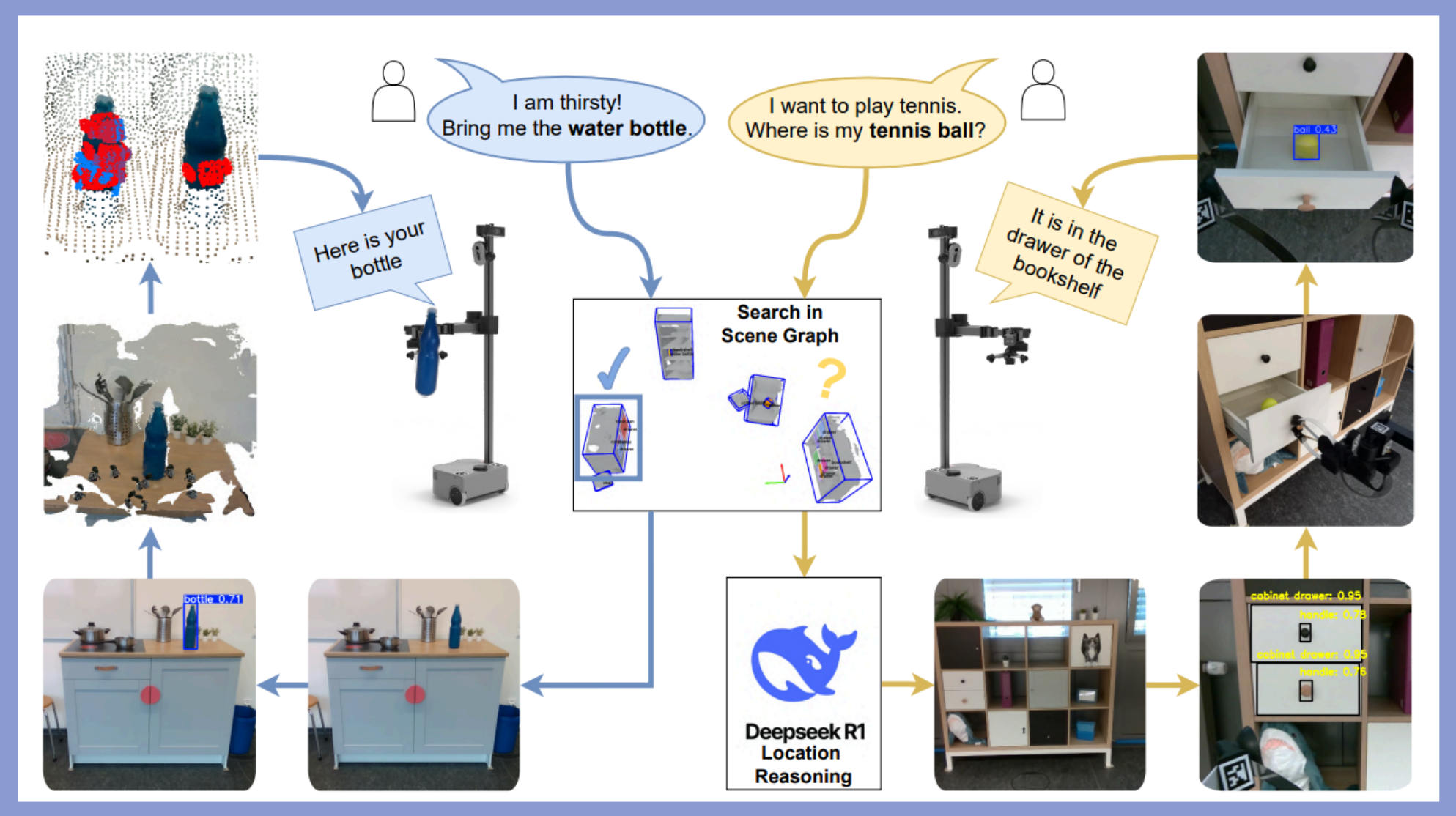

Researchers at the University of Bonn in Germany introduce a new approach that enables robots to locate objects even when they’ve been moved or hidden, such as inside drawers or cabinets, using only an outdated map. By combining spatial awareness, semantic knowledge of common object locations, and geometric reasoning, the system searches more efficiently and avoids infeasible locations. Tested on the Stretch SE3 mobile manipulator, the method achieved reliable navigation and detection while reducing hidden-space search time by 68%, supporting more practical and capable robots for everyday environments.

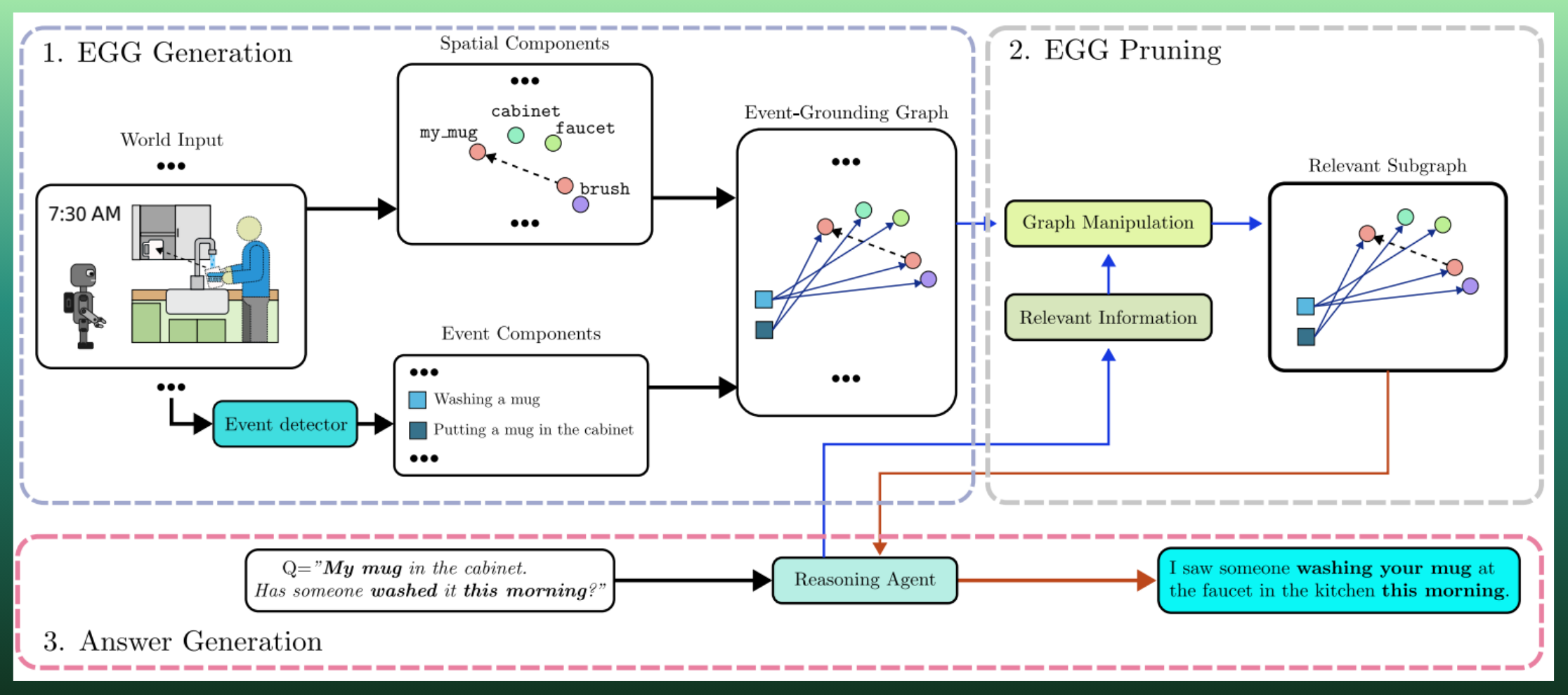

The Event-Grounding Graph (EGG), a new scene representation created by researchers at Aalto University in Finland, helps robots connect objects in their environment with the actions and events happening around them. By grounding events directly to spatial features, EGG enables robots to better understand, reason about, and answer questions involving both scenes and activities. Real-world robot experiments show that this representation improves a robot’s ability to retrieve relevant information and respond accurately to human queries, with the framework released as open source for the community.

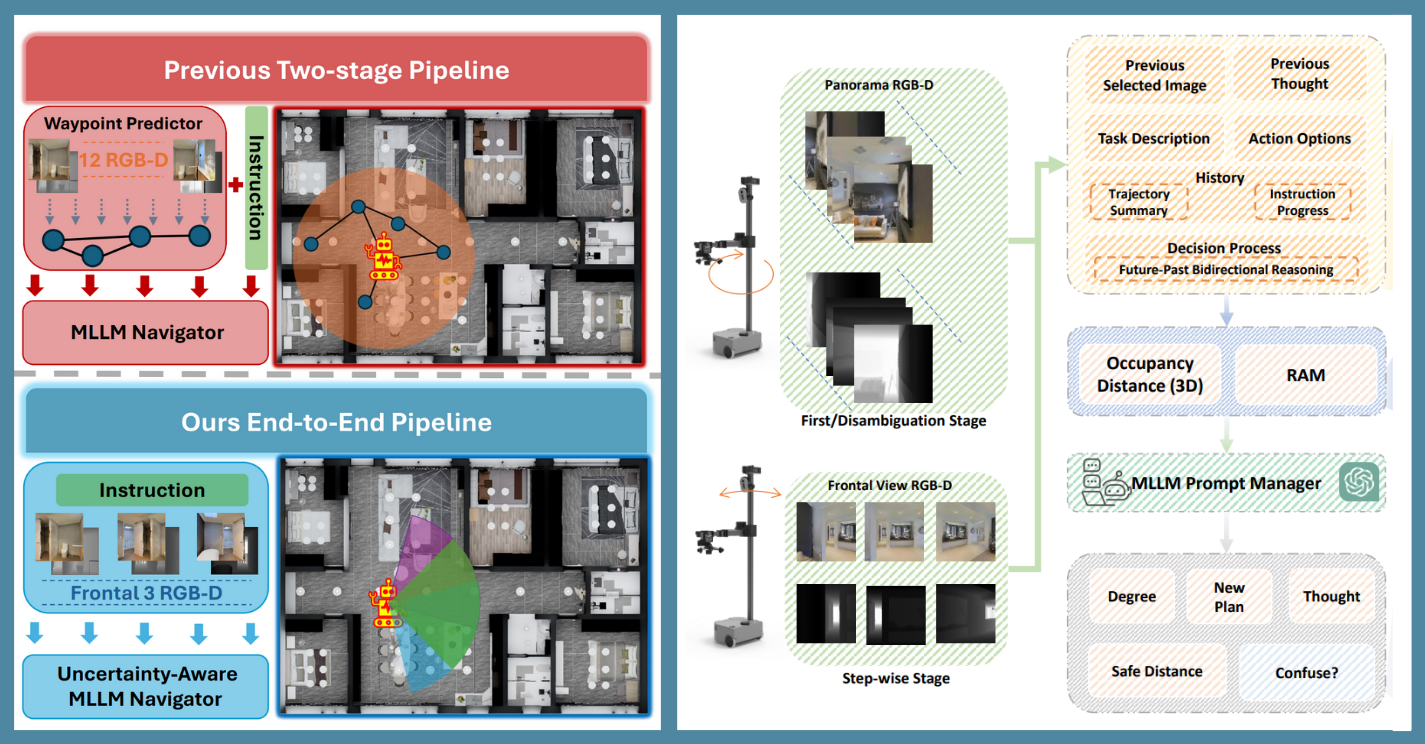

Fast-SmartWay, created by researchers from the University of Adelaide and the Swiss Federal Institute of Technology Lausanne, introduces a faster and more practical approach to zero-shot visual-language navigation for real-world robots. By relying on just three frontal RGB-D images and natural language instructions, the system removes the need for panoramic views and intermediate waypoint prediction, allowing actions to be generated end-to-end. Tests in both simulation and real-robot environments show faster decision-making with performance that matches or exceeds existing panoramic-based methods.

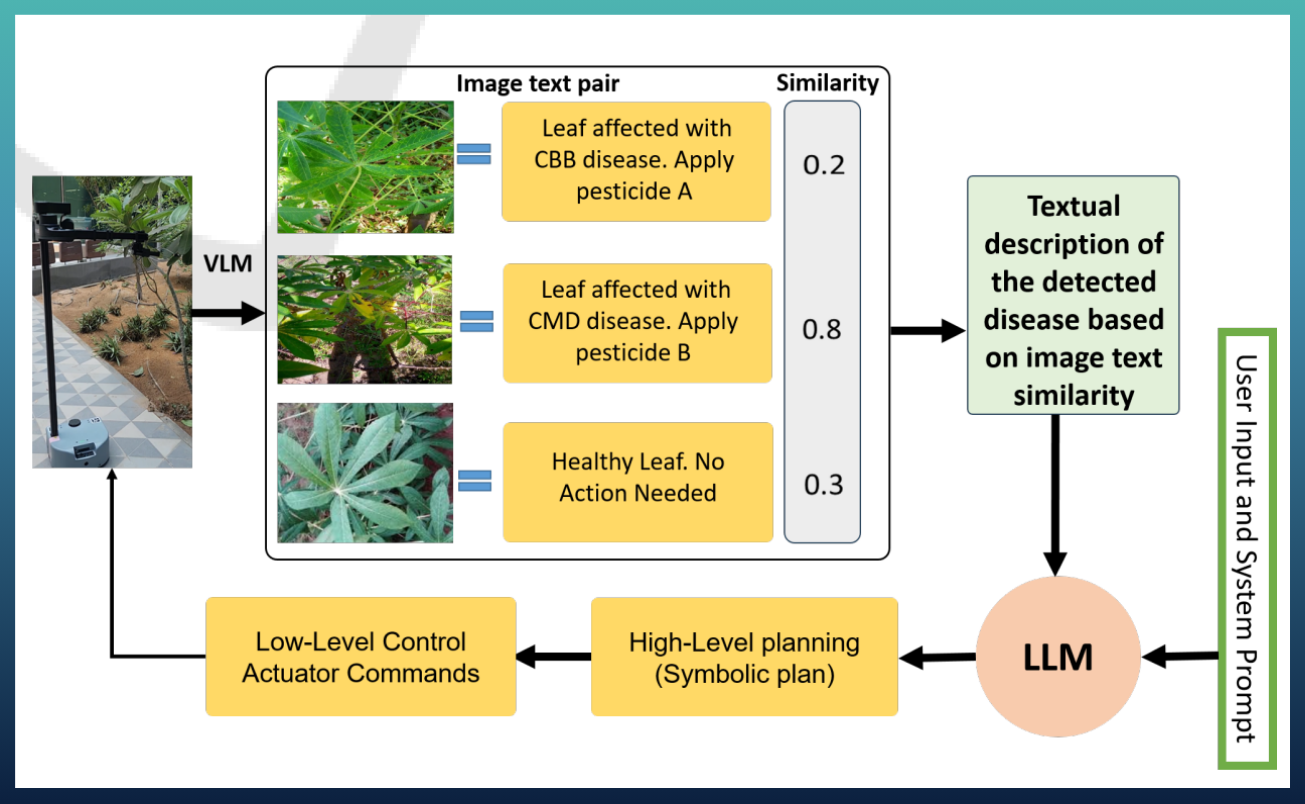

A new robotic pipeline, from researchers at Khalifa University of Science and Technology, demonstrates how autonomous systems can detect and treat crop diseases, using cassava plants as a real-world example. The approach combines vision-language perception for identifying plant diseases with language-based planning to generate and execute treatment actions, closing the loop from detection to intervention. Simulation and real-time evaluations demonstrate promising accuracy and precise execution, highlighting the potential of this modular, platform-agnostic system for scalable precision agriculture.

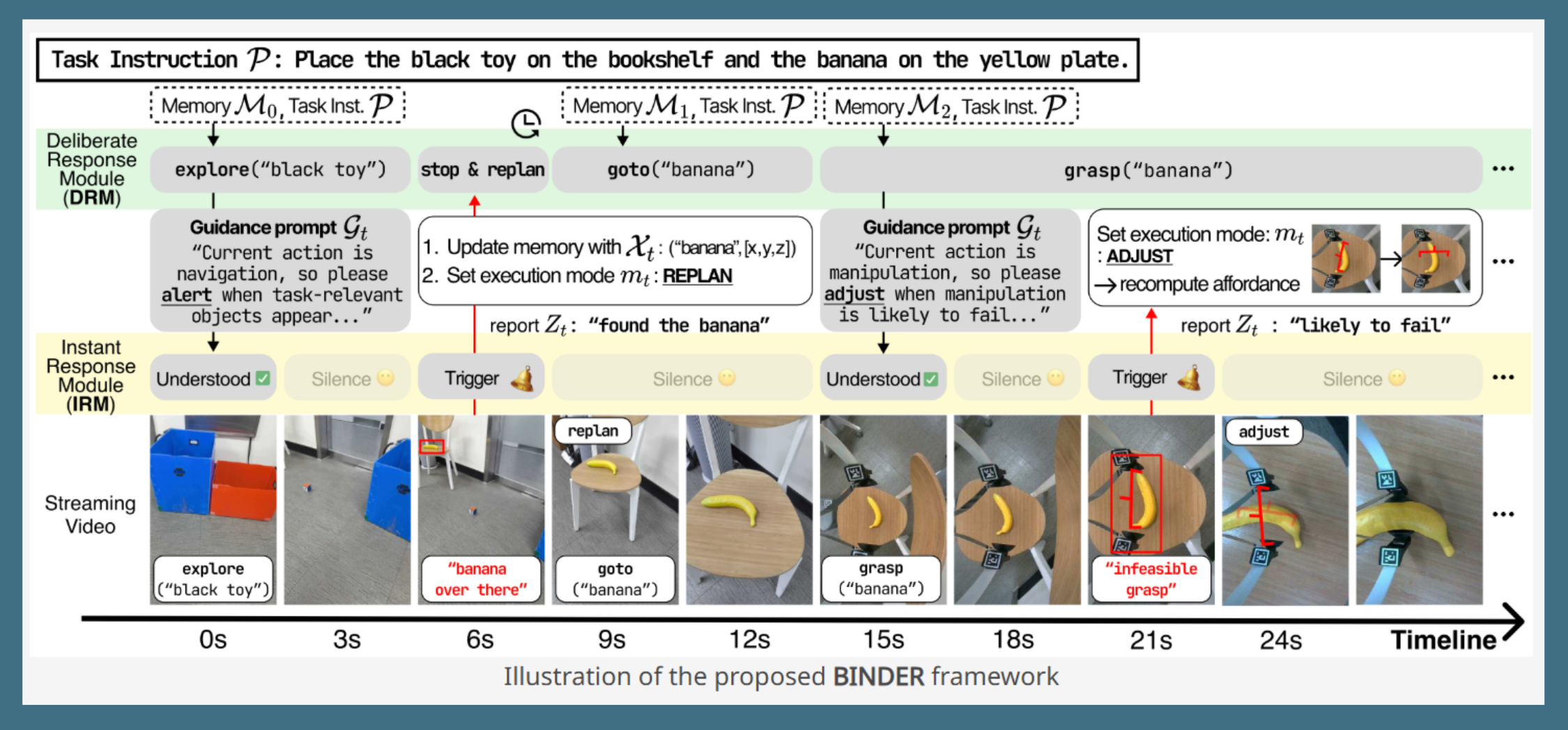

BINDER is a new framework, designed by researchers at Seoul National University, for open-vocabulary manipulation tasks in changing environments. By separating long-term task planning from continuous visual monitoring, the system allows robots to stay aware of changes in their surroundings and adapt actions in real time. Evaluations across multiple real-world environments show improved success and efficiency compared to existing methods, supporting more robust mobile manipulation in everyday settings.